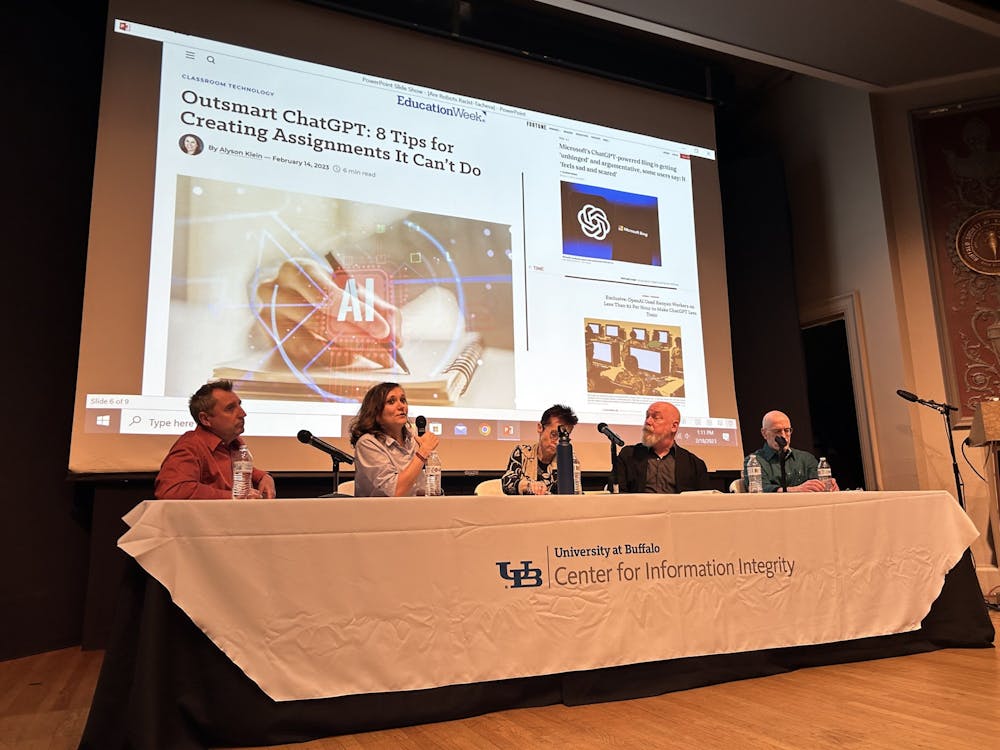

UB’s Center for Information Integrity addressed biases in and misconceptions surrounding artificial intelligence systems at a panel discussion on Feb. 18.

The panel of four professors featured engineering professor E. Bruce Pitman, architecture and media study professor Mark Shepard, comparative literature professor Ewa Plonowska Ziarek and Syracuse University’s Jasmina Tacheva, an information studies professor. The event was moderated by the Center for Information Integrity’s co-director David Castillo.

Pitman kicked off the discussion, explaining that AI systems are really just “classifiers.”

“In some sense, what they do is look at patterns from what they’ve been trained on,” Pitman said. “You give them a new test pattern, and it tries to make a prediction. ‘Does this fit into class one or class two of what I’ve seen before?’ That’s all it does.”
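Pitman's description can be illustrated with a toy example. The sketch below is a minimal nearest-centroid classifier, one of the simplest kinds of classifier and not a method any panelist named; the function names, the two-number "patterns" and the labels are all made up for illustration. It averages the training examples for each class, then assigns a new pattern to whichever class average it sits closest to.

```python
from math import dist

def train(examples):
    """Average the feature vectors of each class to get one centroid per class."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(centroids, pattern):
    """Return the label whose centroid is nearest to the new pattern."""
    return min(centroids, key=lambda label: dist(centroids[label], pattern))

# Hypothetical training data: two-number patterns with made-up labels.
training = [
    ([1.0, 1.2], "class one"),
    ([0.8, 1.0], "class one"),
    ([4.0, 3.8], "class two"),
    ([4.2, 4.1], "class two"),
]

centroids = train(training)
print(classify(centroids, [1.1, 0.9]))  # prints "class one"
```

The classifier can only answer with a label it has already seen, which is the limitation Pitman pointed to: it fits new input into old categories and does nothing else.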

Shepard added that AI is trained on a large “corpus text” and is designed to learn from public data. The system becomes a reflection of what it has been, and needs to be, “explicitly taught.” For systems like ChatGPT, data can be pulled from spaces like Reddit, blogs and other media platforms. The AI searches these sources for “natural language context” and attempts to learn which words are “nested” near input words.
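The idea of words being “nested” near other words can be sketched with a simple neighbor count. This is a toy illustration only, far simpler than the models behind systems like ChatGPT; the three-sentence corpus, the function name and the one-word window are assumptions chosen for the example. For every word, the code tallies which other words appear right next to it.

```python
from collections import Counter, defaultdict

def count_neighbors(corpus, window=1):
    """For each word, count the words that appear within `window` positions of it."""
    neighbors = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            lo = max(0, i - window)
            hi = min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    neighbors[word][words[j]] += 1
    return neighbors

# Hypothetical stand-in for the public text a real system would be trained on.
corpus = [
    "the cat sat on the mat",
    "the cat chased the dog",
    "the dog sat on the rug",
]

neighbors = count_neighbors(corpus)
print(neighbors["cat"].most_common())
```

Whatever the corpus pairs together, the counts pair together, so a system built on such statistics repeats the associations in its source text, welcome or not.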

Shepard noted that this was the type of algorithm used to create the AI-generated Twitch sitcom “Nothing, Forever,” a spinoff of the ’90s sitcom “Seinfeld.” But what resulted from this show was more than just the daily shenanigans of Jerry Seinfeld. In a scene broadcast on Feb. 6, Seinfeld’s character suddenly launched into a bigoted tirade. Twitch ultimately banned the show’s broadcast.

Panelists discussed similar occurrences that took place with other systems such as Tay.ai and Bing’s AI chatbot.

Shepard said “Nothing, Forever” was a textbook example of an AI software malfunction — in a sense. The AI did exactly what it was programmed to do, without knowing what was appropriate. “AI simply remixes and regurgitates human sentences, assessing random bits of language,” Shepard said. “In this sense, it’s a little bit like pulling up a mirror to our culture.”

What people find staring back at them can sometimes be racism, misogyny and bigotry, sentiments that AIs, based on their training, predict will appeal to the majority.

“The most radical views get the most attention, when they are attacking someone else, and they are not constructive,” Ziarek said. “Extremist views or anger about extremists: that’s what most engages us.”

Tacheva added that this draw toward extremism online helped radicalize the Tops shooter, resulting in the murders of 10 Black Buffalonians on May 14, 2022. “There are places on the internet, pockets really, where this discourse is encouraged, where our young people are drawn to,” Tacheva said.

She added that while AI is new, it’s built on “historical systems” and the progression of “colonialism, racism and capitalism.”

That doesn’t make AI inherently bad, but it means people cannot treat AI as completely removed from contemporary culture.

Pitman elaborated further on that idea, explaining that AI has substantially improved our navigation, healthcare and finance systems. But AI systems can be susceptible to misinformation, whether they’re designed to check for suspicious financial transactions or identify cancerous growths. That leaves room for implicit biases within these algorithmic systems.

“That should be a big warning sign for us as users of these AI systems,” Pitman said.

But Tacheva said that it’s not about whether artificial intelligence is good or bad. Instead, we need to focus on “re-envisioning the entire process of technology together in a collective form.”

Rachel Galet, a junior and media studies major who attended the panel, said she learned a lot from the discussion.

“I definitely think this is something we should be concerned about,” she said. “They mentioned a lot of stuff that I didn’t know was going on, and most people don’t know how these systems are actually working… Especially for somebody that is going to this space, it makes me think critically about how we can use this for good and not use this as a system of oppression.”

Suha Chowdhury is an assistant news editor and can be reached at suha.chowdhury@ubspectrum.com